Data Engineering Services for AI projects

NEUROSYS Data Engineering services

Data Platform design

We design the data platform architecture around your required use cases, keeping it flexible enough to support changing requirements and new use cases in the future.

We design the platform from both the hardware and software viewpoints, considering the performance the underlying hardware can provide and the workloads the software has to support. The technology landscape changes constantly, as new hardware, cloud services and technologies open the door to performance gains and completely new use cases.

Data platform engineering

Our experienced data engineers are familiar with the technologies widely used in cloud and on-premises data architectures.

We can build a data architecture from scratch or add capabilities to an existing data platform, such as storage layer development (data lakes and warehouses), data pipelining (ETL jobs or complex batch processing) or analytical components (BI tools and AI model deployment).

Data integration

Businesses often collect data in many separate locations and technologies, such as on-premises relational databases, CRM tools, analytics tools and object storage.

This may work well for operational tasks. To develop analytics tools and AI models that generate insight from the data, however, it’s often necessary to integrate it into a common platform, where analytical and operational workloads are kept separate and data from the various sources can be used together.

We work with our partners to understand the nature of their data, develop an integration strategy and implement it in either a new or an existing architecture.
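As a rough illustration of what integration means in practice, the sketch below copies data from two separate sources into one analytical store and joins it there. Everything in it is a hypothetical stand-in: the CRM export is an inline CSV string, the operational database is an in-memory SQLite database, and the table and column names are invented for the example.

```python
import csv
import io
import sqlite3

# Hypothetical CRM export; in practice this would come from a file or an API.
CRM_CSV = """customer_id,email
1,ana@example.com
2,ben@example.com
"""


def load_crm_rows(csv_text):
    """Parse the CRM export into dictionaries with typed fields."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [{"customer_id": int(r["customer_id"]), "email": r["email"]} for r in reader]


def build_operational_db():
    """Create an in-memory stand-in for an on-premises relational database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer_id INTEGER, amount REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [(1, 20.0), (1, 5.5), (2, 12.0)],
    )
    return conn


def integrate(crm_rows, operational_conn):
    """Copy both sources into one analytical store and join them there."""
    analytics = sqlite3.connect(":memory:")
    analytics.execute("CREATE TABLE customers (customer_id INTEGER, email TEXT)")
    analytics.execute("CREATE TABLE orders (customer_id INTEGER, amount REAL)")
    analytics.executemany(
        "INSERT INTO customers VALUES (:customer_id, :email)", crm_rows
    )
    # Read from the operational system once, then query only the analytical copy.
    analytics.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        operational_conn.execute("SELECT customer_id, amount FROM orders"),
    )
    query = """
        SELECT c.email, SUM(o.amount)
        FROM customers c JOIN orders o USING (customer_id)
        GROUP BY c.email ORDER BY c.email
    """
    return analytics.execute(query).fetchall()


if __name__ == "__main__":
    print(integrate(load_crm_rows(CRM_CSV), build_operational_db()))
```

The analytical query runs against the copy, so the operational database is only touched during the load step.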

Data storage layer design

Different data and different use cases require different storage technologies. Structured data is usually best kept in a data warehouse in columnar format, while unstructured data such as images, video and audio is better kept in a data lake.

We design the appropriate storage system with fast interconnects that enable the analytics tools to access the data efficiently, providing the required performance.
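To show why a columnar format suits analytical queries, here is a minimal sketch in plain Python. The records and field names are made up for illustration; a real warehouse stores columns on disk with compression and indexing, which this toy example does not attempt.

```python
# Hypothetical order records in row-oriented form, as an operational system might hold them.
ROWS = [
    {"order_id": 1, "region": "north", "amount": 20.0},
    {"order_id": 2, "region": "south", "amount": 5.5},
    {"order_id": 3, "region": "north", "amount": 12.0},
]


def to_columnar(rows):
    """Pivot a list of row dictionaries into one list per column."""
    columns = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns


def total_amount(columns):
    """Aggregate by reading only the 'amount' column, ignoring all others."""
    return sum(columns["amount"])


if __name__ == "__main__":
    print(total_amount(to_columnar(ROWS)))
```

An aggregate over one field touches a single list here, whereas the row-oriented form requires visiting every record in full.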

Data pipeline development

Data pipelines are used for ETL jobs and for batch processing of data in analytics and machine learning workloads.

Good data pipelines are performant, robust and lend themselves well to monitoring and extending when requirements change.
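One way to read "robust, easy to monitor and easy to extend" is a pipeline built from small named steps, with record counts logged after each one. The sketch below uses only the Python standard library; the step names and sample records are hypothetical, and a production pipeline would normally run under an orchestrator rather than a plain loop.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("pipeline")


def extract():
    """Stand-in for reading raw records from a source system."""
    return [{"name": " Ana ", "age": "34"}, {"name": "Ben", "age": ""}]


def drop_incomplete(records):
    """Discard records that are missing an age."""
    return [r for r in records if r["age"]]


def normalise(records):
    """Trim whitespace and convert ages to integers."""
    return [{"name": r["name"].strip(), "age": int(r["age"])} for r in records]


def run_pipeline(records, steps):
    """Apply each step in order, logging record counts for monitoring."""
    for step in steps:
        before = len(records)
        try:
            records = step(records)
        except Exception:
            # Log which step failed, then re-raise so the failure is not hidden.
            log.exception("step %s failed", step.__name__)
            raise
        log.info("%s: %d -> %d records", step.__name__, before, len(records))
    return records


if __name__ == "__main__":
    print(run_pipeline(extract(), [drop_incomplete, normalise]))
```

Extending the pipeline means adding another function to the list of steps; the logged counts make it visible when a step drops more records than expected.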

Big data

The term itself is loosely defined, but the challenge is clear: handling big data requires different, and far more complex, tools than handling small data.

At NEUROSYS we’ve had to deal with all kinds of data and have the experience to know when using the more complex toolset is merited and worth the extra cost in development and maintenance.

And if you really need it, we can help you make the right choices and build the system that helps you solve your problems.

Data Engineering Processing Services

- Data Ingestion

- Data storage

- Data processing

Data Ingestion

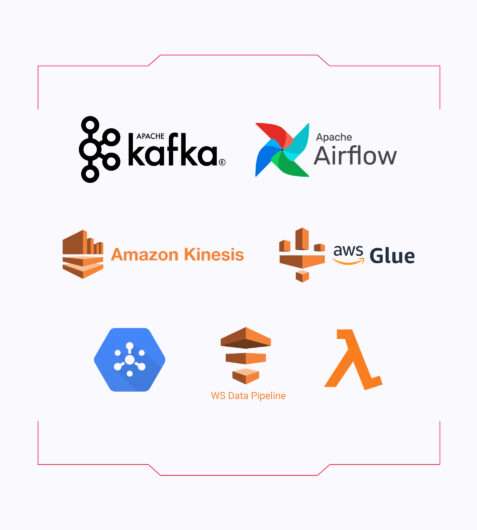

We use Kafka, Kinesis, Pub/Sub or similar for data ingestion and initial processing.

For simpler workflows like batch processing, we use data pipelining tools such as Apache Airflow and different data source connectors or cloud platform tools such as AWS Glue, Lambda and Data Pipeline.
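Streaming platforms deliver events continuously, and a common first processing step is to group them into small batches before handing them on. The sketch below shows that grouping in plain Python. It is not the Kafka, Kinesis or Pub/Sub client API; the event source is an ordinary iterable standing in for a stream, and the function name is invented for this example.

```python
from itertools import islice


def micro_batches(events, batch_size):
    """Yield consecutive lists of at most batch_size events from any iterable."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(events)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


if __name__ == "__main__":
    # A range stands in for a stream of incoming events.
    for batch in micro_batches(range(7), 3):
        print(batch)
```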

Data Storage

We keep structured data in a data warehouse in columnar format and unstructured data such as images, video and audio in a data lake.

Fast interconnects between the storage layer and the analytics tools provide the required access performance.

Data processing

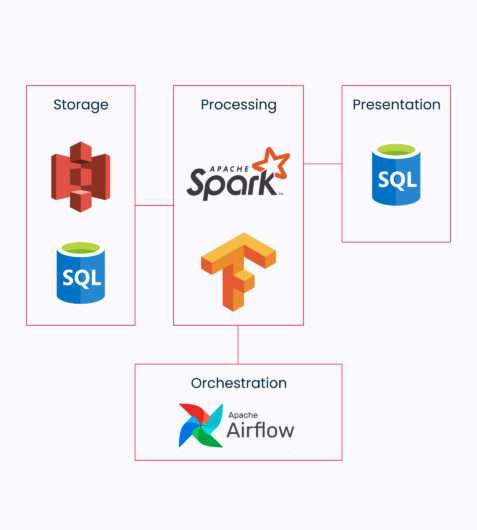

For orchestration, our tools of choice are Apache Airflow on-premises, and AWS Lambda with event triggers or GCP Dataflow.

The processing itself is handled by various tools, from Spark for big data to TensorFlow for deep neural networks.

We work mainly in the Python and Linux ecosystems and have extensive experience with relevant tools.

How we work

- Mapping the problem

Working closely with your company, we will identify the key areas where AI can bring the most value. This involves meetings with all stakeholders and developing a roadmap for action together.

- Familiarization with existing infrastructure

We analyze the infrastructure you currently use and plan how to use it for the current goals or extend it to accommodate the new requirements.

- Proposal for deliverables

Based on our meetings and data analysis, we’ll share with you the possible solutions. We will work hand in hand to agree on the desired outcome.

- Development in a test environment

Your data is valuable, so the tools processing it must be checked to avoid data corruption or loss. Before deployment, we set up everything in a test environment and ensure everything works end to end.
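A typical end-to-end check in a test environment compares what went into a processing step with what came out. The sketch below is one possible form of such a check, assuming records are JSON-serializable dictionaries and that the step under test is supposed to copy them unchanged; the function names are hypothetical.

```python
import hashlib
import json


def fingerprint(records):
    """Return (row count, order-independent checksum) for a list of dict records."""
    digests = sorted(
        hashlib.sha256(json.dumps(r, sort_keys=True).encode("utf-8")).hexdigest()
        for r in records
    )
    combined = hashlib.sha256("".join(digests).encode("utf-8")).hexdigest()
    return len(records), combined


def check_no_loss_or_corruption(source, target):
    """Return None if target matches source, otherwise a description of the problem."""
    src_count, src_sum = fingerprint(source)
    tgt_count, tgt_sum = fingerprint(target)
    if src_count != tgt_count:
        return f"row count mismatch: {src_count} source vs {tgt_count} target"
    if src_sum != tgt_sum:
        return "checksum mismatch: same row count but different content"
    return None


if __name__ == "__main__":
    source = [{"id": 1, "value": "a"}, {"id": 2, "value": "b"}]
    print(check_no_loss_or_corruption(source, list(reversed(source))))
```

The row count catches lost or duplicated records, and the checksum catches records whose content changed along the way. Sorting the per-record digests makes the comparison independent of row order.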

- Deployment to work with live data

When the system has passed all required tests, we can deploy it to the live environment. Monitoring will, of course, still be set up to notify us about any inconsistencies before they turn into problems.

- Maintenance

All systems require maintenance from time to time, and data processing can be especially sensitive in this respect, as data changes over time along with your organization and your customers. We are happy to help our customers keep the system performing well.

INDUSTRY EXPERIENCE

Industries We Serve

Manufacturing

Education & E-Learning

Services
